A Discussion of the Application and Development of IoT in the Mobile Broadband Era (Part 2)

Author/Boyuan, su [Issue Date: 2014/8/26]

As the previous article discussed, the Software-Defined Data Center is a likely evolution of the back-end infrastructure for IT resource applications. For Internet of Things usage over mobile broadband, its role comes down to reducing cost and complexity and responding efficiently as the infrastructure is improved to meet IT and business requirements. In addition, the maturity and development of two technologies will directly determine whether an ideal, ubiquitous mobile application of the Internet of Things can be built. One is the mobile SON (Self-Organizing Network) and the other is Big Data.

With the issuing of 4G licenses and the launch of 4G service, Taiwan has officially entered the mobile broadband era. Substantial growth in wireless access bandwidth, however, does not mean that resources are unlimited. Bandwidth use will escalate rapidly through heavy consumption such as high-resolution video streaming, file uploads and downloads, and the large numbers of small packets that Internet of Things applications exchange for control information. If the underlying infrastructure lacks complete contingency and management mechanisms, the higher theoretical bandwidth or carrying capacity of LTE will not keep up with mobile bandwidth consumption, and the inherent limits of the network and technology will quickly be reached, from the wireless last mile to the network backbone. Meeting these goals, improving user satisfaction, and creating new sources of revenue under such conditions is a daunting challenge.

Expanding the mobile broadband network and promoting industrial development and upgrading are already fundamental issues for the further development of mobile network applications. This includes providing more convenient smart services through the application of technology, optimizing the quality of the mobile network, and reducing operational complexity in terms of personnel and maintenance cost.

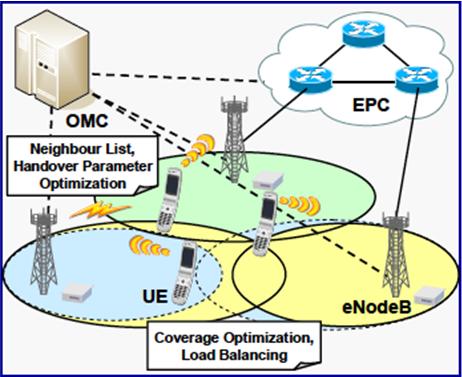

Picture 1: LTE Mobile Network with SON mechanism (Data source: NEC)

3GPP, the partnership of standards organizations responsible for formulating global mobile system specifications and standardizing technical specifications and communication protocols, began to address SON (Self-Organizing Network) in LTE Release 8. The goals are Self-Configuration, Self-Optimization, and Self-Healing, which together make mobile networks smarter while reducing their complexity and cost.

I. Self-Configuration:

This applies to newly added base station equipment, which can achieve plug-and-play during network construction. Parameters and software are downloaded automatically, so equipment can be brought into operation quickly with minimal manpower. The main functions, such as Automatic Neighbor Relation (ANR) and automatic configuration of the Physical Cell Identity (PCI), mostly handle the automatic association between devices from different platforms when a base station is installed, matching the related parameters and establishing the communication procedures.
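The idea behind automatic PCI configuration can be sketched roughly as follows. This is a minimal illustration, not the algorithm of any particular vendor or of the 3GPP specifications: the function `assign_pci`, the neighbor table, and the cell names are all hypothetical. It assumes the neighbor relations are already known (for example from ANR) and simply picks the lowest of the 504 LTE PCI values that is not used by a neighbor or by a neighbor's neighbor.

```python
from typing import Dict, Set

PCI_RANGE = range(504)  # LTE defines 504 physical cell identities (0..503)


def assign_pci(new_cell: str,
               neighbors: Dict[str, Set[str]],
               assigned: Dict[str, int]) -> int:
    """Pick the lowest PCI not used by the new cell's neighbors or by
    their neighbors (hypothetical helper, for illustration only)."""
    blocked = set()
    for n in neighbors.get(new_cell, set()):
        if n in assigned:
            blocked.add(assigned[n])        # same PCI as a direct neighbor
        for nn in neighbors.get(n, set()):
            if nn != new_cell and nn in assigned:
                blocked.add(assigned[nn])   # same PCI as a neighbor's neighbor
    for pci in PCI_RANGE:
        if pci not in blocked:
            return pci
    raise RuntimeError("no free PCI available")


# Hypothetical neighbor relations, as an ANR function might report them.
neighbors = {"A": {"B"}, "B": {"A", "C", "NEW"}, "C": {"B"}, "NEW": {"B"}}
assigned = {"A": 0, "B": 1, "C": 2}
new_pci = assign_pci("NEW", neighbors, assigned)
assigned["NEW"] = new_pci
```

In this toy topology the new cell avoids the identities already held by B and by B's other neighbors, which is the kind of conflict a plug-and-play base station would otherwise need an engineer to resolve by hand.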

II. Self-Optimization:

The network can adjust parameters or configurations automatically according to current operating conditions, responding to congestion, interference, and other phenomena in order to optimize efficiency. Examples include Random Access Channel Optimization, Mobility Load Balancing, Mobility Robustness Optimization, Coverage and Capacity Optimization, Interference Control, and Energy Savings.
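As one illustration of self-optimization, Mobility Load Balancing can be pictured as a small feedback rule: when a cell is noticeably more loaded than its neighbor, it biases handover toward that neighbor. The sketch below is a simplified assumption rather than a standardized procedure; the function name, the load threshold, the step size, and the offset limit are all hypothetical values chosen for readability.

```python
def adjust_handover_offset(offset_db: float,
                           own_load: float,
                           neighbor_load: float,
                           threshold: float = 0.2,
                           step_db: float = 1.0,
                           max_offset_db: float = 6.0) -> float:
    """Return a new handover offset toward one neighbor.

    Loads are fractions of used radio resources in [0, 1].
    A larger offset makes handover to the neighbor more likely.
    All default values are illustrative assumptions.
    """
    diff = own_load - neighbor_load
    if diff > threshold:
        offset_db += step_db    # this cell is busier: push users out
    elif diff < -threshold:
        offset_db -= step_db    # neighbor is busier: keep users here
    # Clamp so that repeated adjustments cannot run away.
    return max(-max_offset_db, min(max_offset_db, offset_db))
```

Calling the function once per measurement period, for example `adjust_handover_offset(0.0, 0.9, 0.4)`, nudges the offset one step at a time, and the clamp keeps the adjustment within a bounded range.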

III. Self-Healing:

3GPP defined the self-healing function in SON to respond to service interruptions caused by hardware or software failure. For example, based on defined KPI conditions, Cell Outage Detection and Cell Outage Compensation monitor the configuration of adjacent cells and adjust cell coverage to compensate when user service is disrupted, for instance by adjusting transmission power or correcting the antenna angle.
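The detect-then-compensate pattern described above might be sketched like this. It is only an assumption-laden illustration: the KPI used (a connection success rate), the threshold, the window length, the power values, and the names `is_outage` and `compensate` are hypothetical and are not taken from the 3GPP specifications.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Cell:
    name: str
    tx_power_dbm: float
    max_tx_power_dbm: float = 46.0   # assumed upper limit


def is_outage(success_rates: List[float],
              threshold: float = 0.5,
              window: int = 3) -> bool:
    """Flag an outage when the last `window` KPI samples are all
    below the threshold (avoids reacting to a single bad sample)."""
    recent = success_rates[-window:]
    return len(recent) == window and all(r < threshold for r in recent)


def compensate(neighbors: List[Cell], boost_db: float = 3.0) -> None:
    """Raise each neighbor's transmit power, capped at its maximum,
    to cover part of the failed cell's area."""
    for cell in neighbors:
        cell.tx_power_dbm = min(cell.max_tx_power_dbm,
                                cell.tx_power_dbm + boost_db)


# Hypothetical KPI history of a failing cell and its two neighbors.
history = [0.98, 0.20, 0.10, 0.00]
neighbor_cells = [Cell("N1", 40.0), Cell("N2", 45.0)]
if is_outage(history):
    compensate(neighbor_cells)
```

A real deployment would also adjust antenna tilt and revert the compensation once the failed cell recovers; those steps are omitted here to keep the sketch short.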

3GPP Release 11 standardizes further mechanisms that give the network more accurate information about user-perceived performance and feed it back into network optimization. This information includes uplink and downlink traffic volume, per-subscriber QoS, reports of radio link interruption or signal failure, and location data returned by users on request.

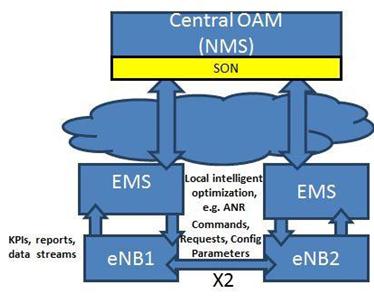

Given the SON concept described above, the architecture can be chosen at deployment time according to actual needs. Three types are distinguished: centralized SON, distributed SON, and hybrid SON. Each handles functions such as monitoring, load balancing, and parameter adjustment, as shown in Pictures 2 to 4 below.

In the centralized SON framework, a unified OAM management platform manages the coordination among eNodeBs, which mostly consists of periodically adjusting eNodeB parameters. The disadvantage is that results measured through KPIs or monitored from UEs must first be transferred to the back end, which can only then respond with adjustments according to the configured conditions. As a result, the system adjusts relatively slowly.

Picture 2: Diagram of centralized SON network framework (Data source: 4G Americas, “Self-Optimizing Networks in 3GPP Release 11”)

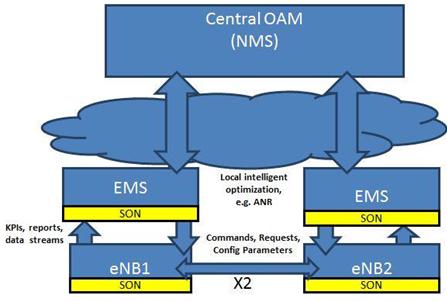

In the distributed SON framework, the primary adjustment mechanism resides in each eNodeB. Each eNodeB is given the autonomy to decide how to adjust the system, based on its own UE measurements and on status indicators received from other eNodeBs (through the standard X2 interface). This can be applied to a multi-vendor network environment and provides faster adjustment. However, in heterogeneous networks from multiple vendors, more care is needed with the methods and standards each manufacturer uses to calculate and evaluate KPI-related parameters. Otherwise, SON may be hard to implement because the mechanisms differ between suppliers.

Picture 3: Diagram of distributed SON network framework (Data source: 4G Americas, “Self-Optimizing Networks in 3GPP Release 11”)

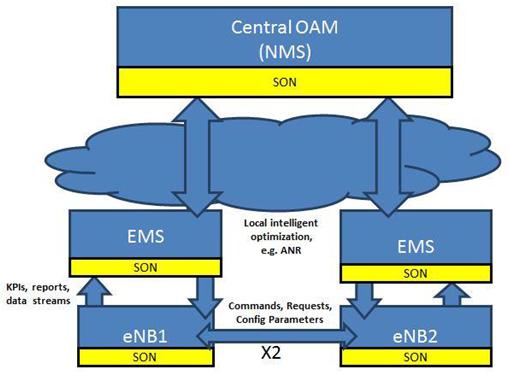

In practice, the centralized and distributed approaches are not mutually exclusive. Part of the optimization can be handled by the centralized system while other adjustment mechanisms are implemented locally by the eNodeB, which forms a hybrid SON. For example, the initial configuration can be set by the centralized system, while adjustment and monitoring of the state parameters of the eNodeB and its UE connections are handled by autonomous adjustment mechanisms located in the eNodeB.

Picture 4: Diagram of hybrid SON network framework (Data source: 4G Americas, “Self-Optimizing Networks in 3GPP Release 11”)
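The division of labor in a hybrid SON can be pictured with a small sketch. Everything here is a hypothetical illustration rather than a real OAM or eNodeB interface: the class names, the configuration keys, and the power values are assumptions. The central platform hands out the initial setting and the allowed range, and the eNodeB then adjusts locally within that range without a round trip to the back end.

```python
class CentralOAM:
    """Centralized part: provides initial settings and allowed ranges."""

    def initial_config(self, enb_id: str) -> dict:
        # Hypothetical values for illustration only.
        return {"tx_power_dbm": 40.0, "min_dbm": 34.0, "max_dbm": 46.0}


class ENodeB:
    """Distributed part: adjusts locally within the centrally set range."""

    def __init__(self, enb_id: str, oam: CentralOAM) -> None:
        self.enb_id = enb_id
        self.config = oam.initial_config(enb_id)

    def local_adjust(self, delta_db: float) -> float:
        """Apply a local power change, clamped to the central limits."""
        cfg = self.config
        wanted = cfg["tx_power_dbm"] + delta_db
        cfg["tx_power_dbm"] = max(cfg["min_dbm"], min(cfg["max_dbm"], wanted))
        return cfg["tx_power_dbm"]


enb = ENodeB("enb-1", CentralOAM())
```

The clamp is the point of the design: the fast local loop keeps its autonomy, while the slower central platform still bounds what any single eNodeB may do.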

Internet of Things applications depend on large numbers of networked devices to collect large amounts of data. To maximize the value of these applications, the data must be sent to centralized or decentralized processing platforms over wired or wireless links, where further analysis or value-added services can begin after the necessary data processing.

In many situations, Internet of Things applications gather data through different network access methods. In the traffic field, for example, data from satellite positioning systems and from camera sensors inside or outside vehicles is transmitted by mobile broadband devices over mobile carrier networks. Part of the data may be returned through roadside or gateway sensing devices connected to an outdoor network (such as an outdoor switch). In addition, operational data from urban mobile base stations, or changes in signal strength at indoor and outdoor micro base stations, may be used to estimate crowds, traffic, driving speed, and location. A wide variety of data sources and data formats therefore needs to be integrated and processed.
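To make the integration problem concrete, the sketch below normalizes two differently formatted inputs into one common record layout. The formats and field names are invented for illustration (a JSON message from a vehicle and CSV rows from a roadside sensor); real deployments would define their own schemas.

```python
import csv
import io
import json
from typing import Dict, List


def from_vehicle_json(text: str) -> Dict[str, object]:
    """Convert one hypothetical vehicle JSON message to the common layout."""
    msg = json.loads(text)
    return {"source": "vehicle", "id": msg["vehicle_id"],
            "lat": float(msg["lat"]), "lon": float(msg["lon"]),
            "speed_kmh": float(msg["speed_kmh"])}


def from_roadside_csv(text: str) -> List[Dict[str, object]]:
    """Convert hypothetical roadside sensor CSV rows to the common layout."""
    records = []
    for row in csv.DictReader(io.StringIO(text)):
        records.append({"source": "roadside", "id": row["sensor"],
                        "lat": float(row["lat"]), "lon": float(row["lon"]),
                        "speed_kmh": float(row["avg_speed"])})
    return records


# Sample inputs (made up for this example).
vehicle_msg = '{"vehicle_id": "V42", "lat": 25.04, "lon": 121.55, "speed_kmh": 38}'
roadside_rows = "sensor,lat,lon,avg_speed\nR1,25.03,121.56,42.5\n"

unified = [from_vehicle_json(vehicle_msg)] + from_roadside_csv(roadside_rows)
```

Once every source is mapped to the same keys, downstream analysis can treat the records uniformly regardless of which network they arrived on.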

When massive, ubiquitous deployment and frequent data exchange become common features of the Internet of Things, the density, format diversity, and generation speed of the data will reach unprecedented levels. Depending on the use, big data systems will be built either in-house, in an organization's own computing facilities, or as part of the cloud. If the Internet of Things becomes more popular and part of daily life, big data applications delivered as cloud computing will be needed, bringing lower maintenance cost and greater elastic scalability.

Although the problems that drove the rise of Big Data emerged fairly recently, the spirit of Big Data is not an entirely new concept. In the past, when an application supporting a core business reached national scale, as in large government projects or financial institutions, many commercial solutions offered viable means of handling that volume of data even when fast response was required. Internet e-commerce, which has matured in recent years, faced the challenges of Big Data early on through its online transaction and cash flow requirements, and has already expanded today's business opportunities for big data processing. The resulting technologies include data warehousing, MPP (massively parallel processing), and the techniques for accelerating large-scale data processing put forward by each of the major commercial database vendors. Most of them focus on structured data. On the commercial application side, data mining became a core technology in response to big data analysis and other hot topics. However, such large commercial products are very expensive, and solutions for unstructured data were lacking, so the range of applications remained limited and business opportunities were confined to a few major clients or manufacturers.

In recent years, very large data centers and social networking sites with millions of users, such as Google and Facebook, have had to handle user queries and data processing on a global scale, and the data processing model based on traditional relational databases has struggled to cope. Related industries and organizations therefore began to develop new technologies and frameworks for big data processing. In particular, the MapReduce paper published by Google and the related papers on the Google File System can be regarded as innovations in big data processing technology. Based on this research, Hadoop, the open source software framework published by the Apache Software Foundation, scales horizontally by distributing storage and computation across a large number of nodes, improving overall system efficiency instead of being limited by the performance of a single machine. The distributed design reduces concern about single points of failure and removes the restriction to structured data. Data in all kinds of formats can be stored in the Hadoop file system and processed by MapReduce programs across the compute nodes to produce results. Hadoop thus addresses the main requirements of big data applications. What's more, the Apache Hadoop software framework is not restricted to a specialized platform: it runs on commodity x86 servers and generally available operating systems, which form the data storage and computing clusters. Compared with well-known solutions from big-name providers in recent years, hardware and software costs for handling the same amount of data are significantly lower.

Picture 5: Basic components of Apache Hadoop (Data source: IEK)
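The MapReduce programming model mentioned above can be illustrated with a few lines of plain Python. This is a single-process sketch of the map, shuffle, and reduce steps only; it does not use Hadoop or its APIs, and the record layout and function names are assumptions made for the example. In a real cluster, the map and reduce calls would run in parallel on many nodes.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(record: dict) -> Iterator[Tuple[str, int]]:
    """Emit (key, value) pairs; here, one count per sensor type."""
    yield record["type"], 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group all values by key, as the framework does between phases."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Combine the values for one key; here, sum the counts."""
    return key, sum(values)


def run_job(records: List[dict]) -> Dict[str, int]:
    pairs = [pair for r in records for pair in map_phase(r)]
    return dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())


# Hypothetical IoT records with mixed sensor types.
counts = run_job([{"type": "gps"}, {"type": "camera"}, {"type": "gps"}])
```

Because each map call looks at one record and each reduce call at one key, the work splits naturally across machines, which is what allows the horizontal scaling described in the text.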

Because the industrial ecosystem based on Hadoop continues to evolve and expand, many traditional data integration and data processing companies, as well as emerging providers, are joining the Hadoop community with their products and technologies to develop commercial solutions that integrate with Hadoop APIs. Vendors such as Cloudera, Hortonworks, MapR, IBM, and HP offer commercial Hadoop distributions and professional data analysis consulting services. Large customers worldwide continue to pursue big data projects.

Given the current state of industry in Taiwan, we face challenges in Hadoop-based big data applications that relatively few other advanced countries share. The main reason is that the technology industry has developed and manufactured hardware for many years, while talent and leadership are missing in the commercial software market. Most people in this sector focus on using tools within the narrow, intuitive scope of writing apps, and lack comprehensive core capabilities (such as mathematical algorithms and general rules of induction). Expertise in underlying software, such as the tools and interfaces that open source frameworks like Hadoop use to implement commercial operating platforms, should be plentiful in software engineering, not to mention among those working on data analysis applications in science and industry, but industry in Taiwan neglects basic research and lacks the needed talent. We hope that the academic community and the nation at large will move toward deeper cooperation. Enterprises should also move away from narrow market competition, establish collaborative relationships with various partners, make use of young, creative, and highly flexible human resources, and even work to attract international talent. Focusing on narrow short-term business opportunities leaves enterprise organizations inert, which makes it very difficult to use these new technologies for commercial innovation within a reasonable period.

Developing the capabilities needed for big data applications is not easy, but from a global perspective they will mature quite fast. For specific applications, the commercial benefits are quite clear, and the potential is almost unlimited. The question is whether there are professionals with the talent to implement them properly. The challenge is great, but it deserves our attention and action to overcome the difficulties.

The activation of LTE service in 2014 can only be regarded as a beginning. As mobile broadband standards, technologies, and solutions develop and mature, suppliers will increasingly support them and popularize their application, including the hotly debated 5G. Provided overall costs, which are beyond our control, do not rise significantly, there will be more opportunities to commercialize investment in infrastructure and high-value-added applications. From a positive perspective, wireless access after 4G, with the capacity that the allocated spectrum can carry, will be real mobile broadband, and on top of it there will be virtually unlimited room for application development. There are opportunities for Taiwan to achieve industrial transformation and develop new talent by creating demand and proposing projects, but this depends on the determination of our entrepreneurs. The change will not occur naturally just because 4G licenses are issued. The impetus of government, investment from industry and academia, and the determination of pioneers are each variables that influence the direction of national development. Whether all parties will be shortsighted or play for long-term success remains to be seen.